An exclusive interview with Jaime Jaramillo, Director of Commercial Services, Xona Space Systems.

It has been said that “the only alternative to a GNSS is another GNSS”. Your website’s homepage claims that Xona will be “[t]he next generation of GNSS.” Will it provide all the positioning, navigation, and timing (PNT) services that the four existing GNSS provide?

JJ: The answer at a high level is “Yes, it will provide all the services that legacy GNSS provides.” GPS has a military service called SAASM/M-Code. Since we’ve designed our constellation and signals from the ground up, we will be providing our signals in an encrypted format. We’ve designed into the system the commercial equivalent of M-Code, which will be an encrypted signal using strong, commercially available encryption algorithms.

For modern applications, we’re going to be able to provide all the services that GNSS provides plus a couple of additional benefits. Pulsar, which is the name of our service, will transmit secure signals. For autonomous applications, security is very important. If you’re riding in an autonomous car, you certainly don’t want somebody to be able to spoof the GNSS signal and steer your car off course. With commercial-grade encryption, we’ll be able to virtually eliminate that type of situation.

So, the short answer to my question is, “Yes. All of that, and then some.”

JJ: Yes, all of that. The traditional GNSS constellations provide some sort of secure signal for defense and military applications; Pulsar will offer the same thing for commercial applications.

How many satellites and orbital planes will the full constellation have?

JJ: The target is approximately 300 satellites. That will include a small number of spares. There will be about 20 orbital planes and a combination of polar and inclined orbits.

So, about 15 satellites per plane?

JJ: Roughly, yes.

When all the satellites are up, their locations and broadcast frequencies will be public, right? They will have to be disclosed to various regulatory bodies.

JJ: You hit it on the head. Because we’re in the process of going through all the regulatory approvals, we can’t talk a lot about our frequencies and a lot of the specifics. Once we get the approvals, we will make an interface control document (ICD) publicly available.

Roughly, when do you expect to achieve initial operational capability (IOC)? And when do you expect to achieve full operational capability (FOC)?

JJ: Unfortunately, the answer is, “it depends.” As you can imagine, it is expensive to put up all 300 satellites. We’re a startup, a commercial company, not a government. So, we’re working diligently on funding. Our target now to launch our first satellites into operation is the end of 2024 or beginning of 2025. We’ll have a phased roll-out. In our first phase, we’re going to offer services that only require one satellite in view: timing services and GNSS augmentation. Then, as we roll out to phase two, we’ll be able to start to offer positioning services in mid-latitudes. As we move to phase three, it will include PNT and enhancements globally. We designed the constellation with polar orbits to provide much better coverage in the polar regions, which will be an improvement over what GNSS provides today. That’s why our system has a combination of polar and inclined orbits.

With climate change and more traffic through the Arctic, that’s going to become more important.

JJ: Exactly. When we talk to potential customers today, that question comes up.

What will be the capabilities at various points between initial capability and full capability?

JJ: There will be three phases. The first one, when we can use one satellite in view; the second one, when there’ll be at least four satellites in view in the mid-latitudes and some in the polar regions some of the time; and the third one, when we have more than four satellites in view, basically anywhere on the globe.
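Why the phases hinge on satellites in view can be sketched in a few lines. This is a hypothetical illustration, not Xona’s published service logic: the function name and the exact service labels are assumptions. It relies on standard GNSS reasoning: a timing receiver at a known, surveyed position has only one unknown (its clock offset), so one satellite suffices, while stand-alone positioning must solve for x, y, z and the clock offset, which takes at least four.

```python
# Hypothetical mapping from satellites in view to the services described in
# the interview; the thresholds reflect standard GNSS geometry, not a Xona spec.

def available_services(sats_in_view: int) -> list[str]:
    """Return the services a receiver could use with this many satellites in view.

    One satellite: timing (receiver position assumed already known, so the
    only unknown is the receiver clock offset) and augmentation data.
    Four or more: positioning (unknowns x, y, z and the clock offset).
    """
    if sats_in_view < 0:
        raise ValueError("satellite count cannot be negative")
    services: list[str] = []
    if sats_in_view >= 1:
        services += ["timing", "GNSS augmentation"]
    if sats_in_view >= 4:
        services.append("positioning")
    return services


print(available_services(1))  # ['timing', 'GNSS augmentation']
print(available_services(4))  # ['timing', 'GNSS augmentation', 'positioning']
```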

When do you expect to complete your constellation?

JJ: Our target for full operational capability is the end of 2026 or the beginning of 2027.

So, two or three years to fill out the constellation.

JJ: It really depends on how the funding goes. We have basically locked down our signal and our hardware technology. Now, it’s a matter of getting enough funding to launch. If we get more funding sooner, then those dates will come sooner. If it takes longer to achieve the funding, then those dates may slip. But those are the targets that we have today.

Who will launch your satellites?

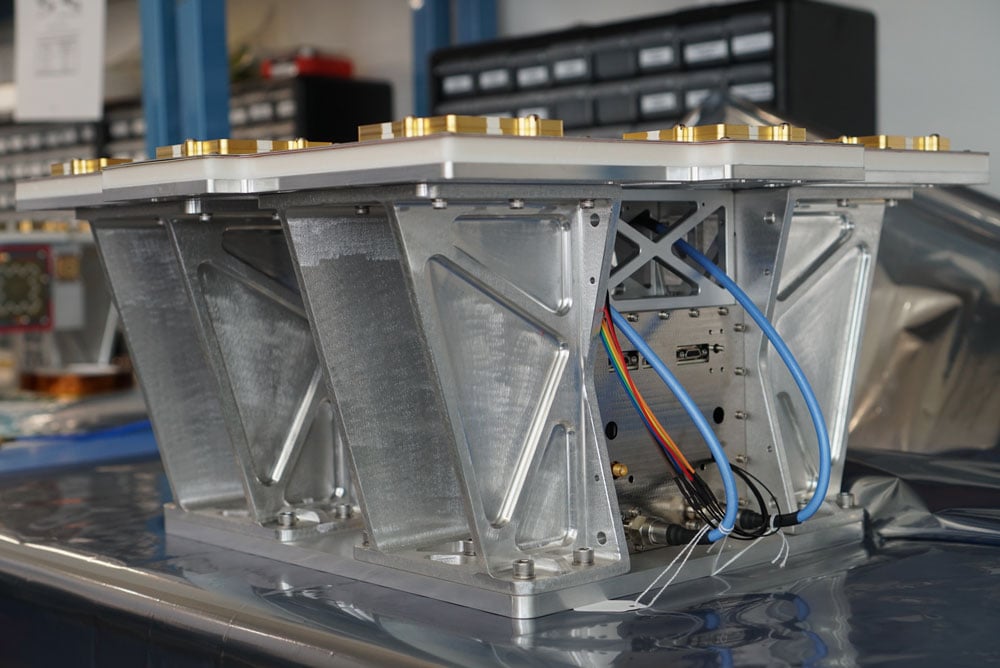

JJ: That decision has not been made yet, but the demo satellite that we have in space was launched in May of last year on a SpaceX Falcon 9 rocket.

What is your business model? Will you have different tiers of service? Will your rate structure enable mass adoption?

JJ: We are targeting both mass-market applications and high-performance ones. LEO brings many benefits in comparison to MEO in just about every industry to which it can be applied. For mass-market applications, we are targeting a business model that includes what we call a lifetime fee: a customer or OEM pays a fee one time and the service works for the life of the device. If you’re using GNSS on your cell phone, you don’t want to have to pay to renew that every year. Nobody does that today and nobody’s expecting to do that in the future. Our lifetime fee model will usually apply to mass-market applications. For higher-performance applications that have more capabilities associated with them, there’ll be different tiers, each with different services. We can provide all kinds of subscription plans.

What will be the differentiators between the different tiers?

JJ: The base service, initially, will be a timing service. Once we have enough satellites to provide four in view, we will expand that to a positioning service. Those will be the base services. We have bandwidth to transmit some data over the constellation and we could use it to provide enhancements. For example, if you make surveying receivers, you could sell your customers enhancement services from the sky, rather than from a network. We’ll also be offering a service where, if security is very important, we’ll be able to provide all the keys for the different services over the air rather than over the internet or a network connection. That’ll be a separate service, with a separate fee.

Lastly, we are also planning to provide an integrity service that will verify that the signal meets certain performance thresholds. Critical applications that need certain levels of performance will be able to receive this integrity information. If the signal drops below those thresholds, we will flag that to the device so that it knows that, even though it is receiving a signal, it should not continue to use it due to signal degradation.
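The flag-and-exclude idea behind such an integrity service can be sketched as follows. Everything here is hypothetical: Xona has not published its integrity message format, so the `IntegrityMessage` fields, the single error threshold, and the helper names are assumptions made for illustration. The system side sets a “do not use” flag when a satellite’s estimated error exceeds a threshold; the receiver side drops flagged satellites and, conservatively, any satellite for which it has no integrity message.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntegrityMessage:
    # Hypothetical broadcast fields; not taken from any published Xona ICD.
    satellite_id: int
    do_not_use: bool


def make_integrity_message(satellite_id: int, est_error_m: float,
                           threshold_m: float) -> IntegrityMessage:
    """System side: flag a satellite whose estimated error exceeds the threshold."""
    return IntegrityMessage(satellite_id, do_not_use=est_error_m > threshold_m)


def usable_satellites(tracked: list[int],
                      messages: dict[int, IntegrityMessage]) -> list[int]:
    """Receiver side: keep tracked satellites that are not flagged.

    A satellite with no integrity message is excluded, which is the
    conservative choice for a critical application.
    """
    return [sv for sv in tracked
            if sv in messages and not messages[sv].do_not_use]


msgs = {m.satellite_id: m for m in [
    make_integrity_message(1, est_error_m=0.8, threshold_m=2.0),
    make_integrity_message(2, est_error_m=3.5, threshold_m=2.0),
]}
print(usable_satellites([1, 2, 3], msgs))  # [1]
```

Treating a missing message as unusable is a design choice for safety-critical users; a mass-market receiver might reasonably default the other way.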

With legacy GNSS, satellites in MEO broadcast signals to receivers. There’s no need for two-way communication and, anyway, transmitting to the satellites would require too much power. With LEO satellites, however, you need a lot less power from the ground to talk to the satellites. Would two-way communication benefit certain applications?

JJ: The initial service does not include two-way capabilities. However, we are leaving room in the signal and hardware designs to potentially offer that in the future.

Your business model is the exact opposite of the gift from U.S. taxpayers to the world that is GPS.

JJ: Yes, but there is value we offer that cannot be obtained from GPS.

Who will build the receivers? Do you expect that “if you build it, they will come”?

JJ: We are in discussions with just about every tier one manufacturer out there. What’s publicly announced is that we have a strong relationship with Hexagon | NovAtel. They have been supportive of us for a long time now and are the most advanced in their development and support for our signals.

I assume that, at least for a transitional period of several years, we’re talking about adding Xona to the traditional GNSS on the receivers — just like, many years ago, we went from GPS-only to GPS and GLONASS, and then, more recently, to multifrequency receivers that use all the satellites in view. Would there be any reason, at some point, to have Xona-only receivers?

JJ: We have designed our signals to make it as easy as possible for receiver manufacturers to support them. We want to design the signals so that most receivers can support them with just a firmware upgrade. Many receiver manufacturers ask the same question that you just asked. For certain applications, maybe Xona Pulsar-only makes sense, or maybe it’s just GPS and Xona, or GPS and some other constellation and Xona. There are initiatives looking at all these scenarios, but most of them today are GNSS plus Xona as a complement. However, there are those that are also looking at potentially Xona only.

It’s interesting what you said about firmware as opposed to needing new hardware.

JJ: Correct. Given that we’re a startup, we want to facilitate that. For some of the advanced features, for example encryption, the receiver needs much more horsepower. So, it depends on the receiver. Some highly optimized ASIC-based receivers don’t have the horsepower to run an encryption engine.

Of course, that horsepower is increasing anyway…

JJ: Exactly. And there are other techniques, right? For example, some IoT receiver manufacturers are offloading a lot of the processing power to the cloud. So, the device is designed to have some sort of network connection. Then, if it needs to do heavy processing, it can do that in the cloud. That can be done in different ways. For future applications, some receiver manufacturers are looking to potentially add this capability to next generation receivers.

Of course, the cloud introduces some lag…

JJ: Right. It depends on the application. If it’s an IoT device or an asset tracker, maybe it’s not mission-critical. It just depends on the application.

What markets or applications are you targeting first?

JJ: Timing is a big area of focus for our initial applications, along with potentially offering enhancements, depending on our partners. We are looking to partner with companies that will help us provide enhancements such as signal integrity. Those are the two services that we’re looking to provide first, because we can do both with one satellite in view.

Will the timing you provide be good enough for cell phone base stations? For television broadcasts? For financial transactions?

JJ: Based on our architecture, our system will provide better timing accuracy than what GNSS provides today, because we have several advantages. Our constellation architecture is patented. One of its key pieces is that our satellites are designed to use GPS and Galileo signals, as well as inputs from ground stations, for timing reference. Also, all our satellites will share their time amongst themselves. We will average all these timing inputs and build a clock ensemble on the satellites. That enables much higher accuracies than just having a few single inputs.

That raises a critical question, especially in the context of complementary PNT: will your satellites have their own atomic clocks or will they rely entirely on GNSS? If the latter, any problem with GNSS would also affect your system.

JJ: This was one of the key points that we kept in mind when we architected the constellation. It keeps its own clock ensemble. It uses the time between the satellites, as well as time inputs from GNSS and from the ground, as references, but the time is kept on the satellites themselves in this clock ensemble. So, that clock ensemble runs independently, even if you lose GNSS.

It will degrade…

JJ: Right, it won’t have the same accuracy as when it has all the inputs, but it will keep working because it’s functioning independently. If we have all the references, then the output is very, very high accuracy. If we lose some of those references, it’ll degrade but continue to operate. That design, which makes it independent of GNSS, makes it a very resilient system. So, it is complemented by GNSS, but not dependent on it.
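How fast a free-running clock degrades can be estimated with the standard quadratic oscillator model, x(t) = x0 + y0·t + ½·D·t², where x0 is the initial time error, y0 the fractional frequency offset, and D the linear frequency drift (aging). The sketch below uses that textbook model; the parameter values in the usage lines are illustrative placeholders, not the specifications of Xona’s oscillators, which were not disclosed. It also assumes x0, y0, and D are non-negative so the error grows monotonically.

```python
import math


def holdover_time_error(t_s: float, x0_s: float, y0: float,
                        drift_per_s: float) -> float:
    """Time error (s) after t_s seconds with no external references.

    Quadratic model: x(t) = x0 + y0*t + 0.5*D*t**2.
    """
    return x0_s + y0 * t_s + 0.5 * drift_per_s * t_s ** 2


def holdover_limit(tolerance_s: float, x0_s: float, y0: float,
                   drift_per_s: float) -> float:
    """Seconds until the time error reaches tolerance_s.

    Assumes x0, y0, D >= 0. Solves 0.5*D*t^2 + y0*t + (x0 - tol) = 0
    for its positive root.
    """
    c = x0_s - tolerance_s
    if c >= 0:
        return 0.0  # already out of tolerance
    if drift_per_s == 0:
        return math.inf if y0 == 0 else -c / y0
    return (-y0 + math.sqrt(y0 ** 2 - 2 * drift_per_s * c)) / drift_per_s


# Illustrative only: 1e-11 frequency offset, no drift, 1 microsecond budget.
print(holdover_limit(1e-6, x0_s=0.0, y0=1e-11, drift_per_s=0.0))
```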

The devil’s in the details. What kind of frequency standard will be on the satellites? How fast will their time degrade? How long will it remain sufficiently accurate for certain applications?

JJ: A quartz clock is not an atomic clock. I know where you’re going because I come from the timing industry. Since we’re a commercial company, one of the goals of the constellation design was to keep the cost of the satellites themselves as low as possible, so that we can deploy them at a low cost. We will leverage the very high-quality atomic clocks in GNSS satellites and ground stations in which governments have already invested. Each GPS satellite has two rubidium clocks and one cesium clock. The type of oscillator that we use costs much less, which keeps the satellite cost down; the clock ensemble is what makes up the difference. Also, if you look at our architecture, all the satellites talk to each other. So, the time is being shared between many different oscillators and they’re taking inputs from GNSS and from ground stations. So, that clock ensemble becomes very strong, because it has many inputs from the adjacent satellites and from the different references.
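The benefit of combining many references can be illustrated with an inverse-variance weighted average, the simplest way to fuse independent estimates. This is a deliberately simplified stand-in: operational clock ensembles typically use Kalman filters or dedicated ensemble algorithms, and the interview does not say which Xona uses. The reference names, offsets, and uncertainties below are hypothetical. The point the sketch shows is that each added independent input shrinks the combined uncertainty, and losing inputs widens it without stopping the estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeReference:
    # Hypothetical input: the onboard clock's measured offset relative to
    # one reference (GNSS, ground station, or a neighboring satellite),
    # with a 1-sigma uncertainty.
    name: str
    offset_ns: float
    sigma_ns: float


def ensemble_offset(refs: list[TimeReference]) -> tuple[float, float]:
    """Inverse-variance weighted offset estimate.

    Returns (offset_ns, sigma_ns), assuming the references are independent.
    """
    usable = [r for r in refs if r.sigma_ns > 0]
    if not usable:
        raise ValueError("no usable references; the clock must free-run")
    weights = [1.0 / r.sigma_ns ** 2 for r in usable]
    total = sum(weights)
    offset = sum(w * r.offset_ns for w, r in zip(weights, usable)) / total
    return offset, total ** -0.5


refs = [
    TimeReference("GPS", 10.0, 2.0),
    TimeReference("Galileo", 12.0, 2.0),
    TimeReference("ground station", 11.0, 1.0),
]
print(ensemble_offset(refs))      # all three inputs
print(ensemble_offset(refs[:2]))  # ground input lost: larger sigma
```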

If GPS goes down entirely, we’ll have bigger problems. Your system would continue to work and, even if degraded, will be a lot better than nothing. Your architecture, however, leaves room for people to say that we also need ground-based systems.

JJ: That’s a really good point. The idea of having another LEO-based constellation is to take advantage of what can be done in LEO for GNSS. It’s not intended to replace ground-based systems or alternative systems. If you want the most resilient time and position, you need to use a combination of everything. GNSS alone will not give you the best combination. We always like to say that we’re complementing GNSS.

Jan, what is the role of simulation in building a new GNSS with a very different constellation and very different orbits than existing ones?

JA: Before the Xona constellation or any other emerging constellation has deployed any satellites, simulation is the only way for any potential end-user or receiver OEM to assess its benefits. Before you can do live sky testing, a key part of enabling investment decisions — both for the end users as well as the receiver manufacturers, and everybody else — is to establish the benefits of an additional signal through simulation. Once it’s all up there and running, there are still benefits to simulation, but then there’s an alternative. Right now, there really isn’t an alternative to simulation.

With existing GNSS, you can record the live sky signals and compare them with the simulated ones. It’s a different challenge when it’s all in the lab or on paper.

JA: Yes, but it is not an entirely novel one, at least to us at Spirent. We went through it with other constellations and signals, for example, in the early days of Galileo. It’s often the case that ICDs or services are published before there is a live-sky signal with which to compare them. So, we do have mechanisms for first generating the signal from first principles, putting out the RF, running tests with that RF, and then checking that what we put out is what we expect based on our inputs and the ICD. Obviously, we always work off the ICD, which is essentially our master. Then, a lot of work needs to happen to turn what’s written in the ICD into an actual full RF signal, overlay motion, and all those things. So, we have a well-established qualification mechanism to make sure that whole chain works for signals when we don’t have a real-world constellation.

Another very important check is when you work with some of the leading receiver manufacturers who have done their own implementation and you bring the two things together and see if they marry up. Then there’s always a bit of interesting conversation happening when things don’t line up, but we have a lot of experience in resolving that. So, there’s the internal (mathematical) validation of things — which we do before we bring something to market — and then there is validation with partners, be they the constellation developer or a receiver manufacturer, or both.

JJ: Then, one step further down from the receiver manufacturers, the companies we call OEMs want to validate that the receiver is doing what it’s supposed to do. The best way to do that is with a simulator. You can try to get a live sky signal, but it can be difficult: you must get on a roof, and that may not be an optimal environment. The best way to prove that in a controlled environment is with a simulator. Spirent works with two levels of customers: first, the receiver manufacturers, then all the application vendors or OEMs that use those receivers.

JA: What we’ve done with the SimXona product recently follows very closely along those lines. First, we did validation ourselves. Then, we worked in a close partnership with Xona for them to certify that against some of their own developments. So, we follow that same proven development approach. It’s just that, in this case, the signal comes from LEO.

What is the division of labor here between Spirent Communications and Spirent Federal? In particular, which device comes into play with Xona?

PC: Spirent Federal has provided support to Xona but the equipment is the COTS equipment provided from the UK by Spirent Communications.

JA: This Xona product does not currently implement any restricted technology that is only accessible through Spirent Federal. Restricted technology, especially for secure GPS, is handled by our proxy company, Spirent Federal. However, the SimXona product is a development of Spirent Communications, albeit heavily aided by Spirent Federal on the technical side and in other ways, and there are no Spirent-Federal-specific restricted elements in SimXona or the current Xona offering.

PC: If we ever had to go into a U.S. government facility to demonstrate SimXona or to sell it to them, Spirent Federal would be involved.